New Paper: Using ERPs and RSA to examine saliency maps and meaning maps for natural scenes

Kiat, J.E., Hayes, T.R., Henderson, J.M., Luck, S.J. (in press). Rapid extraction of the spatial distribution of physical saliency and semantic informativeness from natural scenes in the human brain. The Journal of Neuroscience. https://doi.org/10.1523/JNEUROSCI.0602-21.2021 [preprint]

The influence of physical salience on visual attention in real-world scenes has been extensively studied over the past few decades. Intriguingly, however, recent research has shown that semantically informative scene features often trump physical salience in predicting even the fastest eye movements in natural scene viewing. These results suggest that the brain very rapidly extracts visual information that is, at the very least, predictive of the spatial distribution of potentially meaningful scene regions.

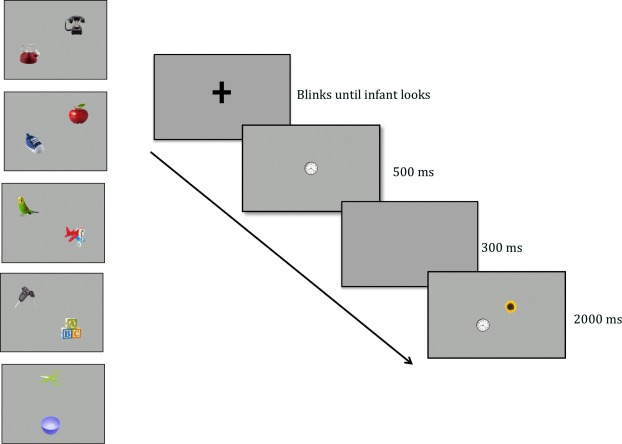

In this new paper, Steve Luck, Taylor Hayes, John Henderson, and I sought to assess the evidence for a neural representation of the spatial distribution of meaningful features and (assuming we found such a link!) to compare the onset of its emergence with the onset of the representation of physical saliency. To do so, we recorded 64-channel EEG data from subjects viewing a series of real-world scene photographs while performing a modified 1-back task: on 10% of trials, subjects were probed to identify which of four scene quadrants was part of the most recently presented image (see Figure 1).

Figure 1. Stimuli and task. Subjects viewed a sequence of natural scenes. After 10% of scenes, they were probed for their memory of the immediately preceding scene.

With this dataset in hand, we next obtained spatial maps of meaning and saliency for each of the scenes. To measure the spatial distribution of meaningful features, we leveraged the “meaning maps” that had previously been obtained by the Henderson group. These maps are derived from crowd-sourced human judgments of the meaningfulness of each patch of a given scene. The scene is first decomposed into a series of partially overlapping, tiled circular patches, and subjects rate each circular patch for informativeness (see Figure 2 and Henderson & Hayes, 2017). Then, these ratings are averaged and smoothed to produce a “meaning map,” which reflects the extent to which each location in a scene contains meaningful information. Note that these maps do not indicate the specific meanings, but simply indicate the extent to which any kind of meaningful information is present at each location.

Figure 2. Top: Example scene with corresponding saliency map and meaning map. Two areas are highlighted in blue to make it easier to see how saliency, meaningfulness, and the image correspond in these areas. Bottom: Examples of patches that were used to create the meaning maps. Observers saw individual patches, without any scene context, and rated the meaningfulness of that patch. The ratings across multiple observers for each patch were combined to create the meaning map for a given scene.
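To make the "averaged and smoothed" step concrete, here is a minimal Python sketch of how patch ratings could be turned into a map. It is not the Henderson group's pipeline: the `(cx, cy, radius, rating)` patch format, the function names, and the simple box filter used for smoothing are all assumptions made for illustration.

```python
import math


def meaning_map(patches, width, height):
    """Average the ratings of all patches that cover each pixel.

    `patches` is a list of (cx, cy, radius, rating) tuples, a hypothetical
    format chosen for this sketch. Pixels covered by no patch get 0.0.
    """
    total = [[0.0] * width for _ in range(height)]
    count = [[0] * width for _ in range(height)]
    for cx, cy, radius, rating in patches:
        # Visit only the bounding box of the circular patch.
        y_lo = max(0, math.floor(cy - radius))
        y_hi = min(height - 1, math.ceil(cy + radius))
        x_lo = max(0, math.floor(cx - radius))
        x_hi = min(width - 1, math.ceil(cx + radius))
        for y in range(y_lo, y_hi + 1):
            for x in range(x_lo, x_hi + 1):
                if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2:
                    total[y][x] += rating
                    count[y][x] += 1
    return [
        [total[y][x] / count[y][x] if count[y][x] else 0.0 for x in range(width)]
        for y in range(height)
    ]


def box_smooth(grid, k=1):
    """Smooth with a (2k+1) x (2k+1) mean filter, clipped at the borders.

    The box kernel is an assumption; any low-pass filter could stand in.
    """
    height, width = len(grid), len(grid[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            window = [
                grid[yy][xx]
                for yy in range(max(0, y - k), min(height, y + k + 1))
                for xx in range(max(0, x - k), min(width, x + k + 1))
            ]
            out[y][x] = sum(window) / len(window)
    return out


# Two overlapping toy patches with ratings 1.0 and 5.0.
raw = meaning_map([(2, 2, 2, 1.0), (5, 2, 2, 5.0)], width=8, height=5)
smooth = box_smooth(raw)
```

In this toy example, pixels covered by both patches receive the mean of the two ratings, which is how partially overlapping patches yield a graded map rather than a mosaic of discs.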

The spatial distribution of physical saliency was estimated algorithmically using the Graph-Based Visual Saliency approach (Harel et al., 2006). This algorithm extracts low-level color, orientation, and contrast feature vectors from an image using biologically inspired filters. These features are then used to compute activation maps for each feature type. Finally, these maps are normalized, additively combined, and smoothed to produce an overall “saliency map”. We chose this algorithm in particular because of its combination of biological plausibility and performance at matching human eye movement data. A few examples of meaning and saliency maps for specific scenes are shown in Figure 3.

Figure 3. Examples of images used in the study and the corresponding saliency and meaning maps. The blue regions are intended to make it easier to see correspondences between the maps and the images.

We then used the meaning maps and saliency maps to predict our ERP signals using Representational Similarity Analysis. For an overview of Representational Similarity Analysis in the context of ERPs, check out this video and this blog post.
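For readers who have not seen RSA before, the core logic can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the analysis code from the paper: the dissimilarity measure (1 minus Pearson r), the data layout, and all function names are assumptions chosen for this sketch.

```python
import math
from itertools import combinations


def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_a * var_b)


def rdm(patterns):
    """Dissimilarity (1 - r) for every pair of scenes, as a flat list."""
    return [
        1.0 - pearson(patterns[i], patterns[j])
        for i, j in combinations(range(len(patterns)), 2)
    ]


def rsa_timecourse(map_vectors, erp):
    """Correlate the model RDM with the neural RDM at each time point.

    map_vectors[s] is the flattened map for scene s (assumed layout).
    erp[t][s] is the scalp pattern (one voltage per channel) for scene s
    at time point t (assumed layout).
    """
    model = rdm(map_vectors)
    return [pearson(model, rdm(scalp_patterns)) for scalp_patterns in erp]
```

The idea is that if two scenes have similar maps, they should also evoke similar scalp patterns at any time point where the brain represents the information captured by the map. The resulting time course is high when the pairwise structure of the maps matches the pairwise structure of the ERPs.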

The results are summarized in Figure 4. Not surprisingly, we found that a link between physical saliency and the ERPs emerged rapidly (ca. 78 ms after stimulus onset). The main question was how long it would take for a link to the meaning maps to be present. Would the spatial distribution of semantic informativeness take hundreds of milliseconds to develop, or would the brain rapidly determine which locations likely contained meaningful information? We found that the link between the meaning maps and the ERPs emerged extremely rapidly, less than 10 ms after the link to the saliency maps (ca. 87 ms after stimulus onset). You can see the time course of the strength of the representational link for saliency and meaning in panel A (colored horizontal lines mark time points with FDR-corrected p < .05) and the jackknifed mean onset latencies for the representational link of saliency and meaning in panel B (error bars denote standard errors).

Figure 4. Primary results. A) Representational similarity between the ERP data and the saliency and meaning maps at each time point, averaged over participants. Each waveform shows the unique variance explained by each map type. B) Onset latencies from the representational similarity waveforms for saliency and meaning. The onset was only slightly later for the meaning maps than for the saliency maps.
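For anyone curious about how jackknifed onset latencies of the kind shown in panel B can be computed, here is a rough Python sketch. The onset criterion used here (the first time point at which a waveform reaches 50% of its peak) and all function names are assumptions for illustration; the criterion used in the paper may differ.

```python
import math


def onset_latency(waveform, times, fraction=0.5):
    """First time at which the waveform reaches `fraction` of its peak."""
    peak = max(waveform)
    if peak <= 0:
        raise ValueError("waveform has no positive peak")
    threshold = fraction * peak
    for t, value in zip(times, waveform):
        if value >= threshold:
            return t
    raise ValueError("threshold never reached")


def jackknife_onset(subject_waveforms, times, fraction=0.5):
    """Mean onset and jackknife standard error across leave-one-out averages."""
    n = len(subject_waveforms)
    totals = [sum(w[k] for w in subject_waveforms) for k in range(len(times))]
    onsets = []
    for w in subject_waveforms:
        # Grand average with this one subject left out.
        loo = [(totals[k] - w[k]) / (n - 1) for k in range(len(times))]
        onsets.append(onset_latency(loo, times, fraction))
    mean_onset = sum(onsets) / n
    # Jackknife standard error: sqrt((n - 1) / n * sum of squared deviations).
    se = math.sqrt((n - 1) / n * sum((o - mean_onset) ** 2 for o in onsets))
    return mean_onset, se
```

Measuring onsets on leave-one-out averages rather than on single-subject waveforms is what makes the approach workable with noisy data, and the `(n - 1) / n` factor in the standard error compensates for the fact that the leave-one-out averages are nearly identical to one another.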

Note that the waveforms show semipartial correlations (i.e., the unique contribution of one type of map when variance due to the other type is factored out). These findings therefore show that meaning maps have a neurophysiological basis that is distinct from that of saliency.
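As a quick illustration of what a semipartial correlation does, the sketch below uses the standard textbook formula, in which the second predictor is removed from the first predictor but not from the outcome. In our setting, `y` would be the neural dissimilarities at one time point, `x1` the dissimilarities from one map type, and `x2` those from the other. The function names are assumptions, and this is not the code used for the paper.

```python
import math


def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_a * var_b)


def semipartial(y, x1, x2):
    """Correlation of y with the part of x1 that is not explained by x2."""
    r_y1 = pearson(y, x1)
    r_y2 = pearson(y, x2)
    r_12 = pearson(x1, x2)
    if abs(r_12) >= 1.0:
        raise ValueError("x1 and x2 are perfectly correlated")
    return (r_y1 - r_y2 * r_12) / math.sqrt(1.0 - r_12 ** 2)
```

If `y` is fully explained by `x2`, the semipartial correlation with `x1` is zero even when `x1` and `y` are strongly correlated in the raw data. This is why a nonzero meaning waveform cannot simply be a byproduct of the correlation between meaning and saliency.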

The rapid time course of the meaning map waveform also indicates that information related to the locations containing potentially meaningful information is computed rapidly, early enough to influence even the earliest eye movements. This is a correlation-based approach, so these results do not indicate that meaning per se is calculated by 87 ms. However, the results indicate that information that predicts the locations of meaningful scene elements is computed by 87 ms. Presumably, this information would be useful for directing shifts of covert and/or overt attention that would in turn allow the actual meanings to be computed.

The data and code are available at https://osf.io/zg7ue/. Please feel free to use this code and dataset (high-density ERP averages for 50 real-world scenes from 32 subjects) to explore research questions that interest you!