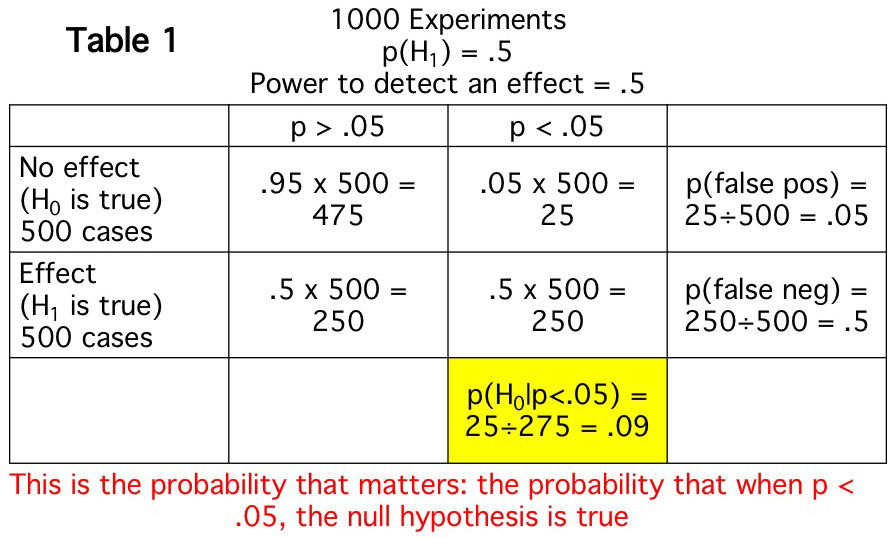

This might not seem so bad. I'm still drawing the right conclusion over 90% of the time when I get a significant effect (assuming that I've done everything appropriately in running and analyzing my experiments). However, there are many cases where I am testing bold, risky hypotheses (that is, hypotheses that are unlikely to be true). As Table 2 shows, if there is a true effect in only 10% of the experiments I run, almost half of my significant effects will be bogus (i.e., p(null | significant effect) = .47).
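The .47 figure is just Bayes' rule applied to the expected counts of significant results. Here is a minimal Python sketch. It assumes alpha = .05 and a power of .5; the tables' exact parameters aren't shown here, but power = .5 reproduces both the .47 in Table 2 and the "over 90%" figure for Table 1. The helper name `p_null_given_sig` is my own.

```python
def p_null_given_sig(p_effect, power, alpha=0.05):
    """Bayes' rule: probability that the null is true, given a significant result."""
    p_null = 1.0 - p_effect
    false_pos = alpha * p_null     # significant results when the null is true
    true_pos = power * p_effect    # significant results when a real effect exists
    return false_pos / (false_pos + true_pos)

# Table 2-style scenario: real effect in 10% of experiments (power = .5 assumed)
print(round(p_null_given_sig(p_effect=0.10, power=0.5), 2))  # 0.47
# Null and alternative equally likely, as in Table 1 (power = .5 assumed)
print(round(p_null_given_sig(p_effect=0.50, power=0.5), 2))  # 0.09
```

Note that the p value never appears in the calculation; only alpha, power, and the prior probability of an effect do.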

The probability of a bogus effect is also high if I run an experiment with low power. For example, if the null and alternative are equally likely to be true (as in Table 1), but my power to detect an effect (when an effect is present) is only .1, fully 1/3 of my significant effects would be expected to be bogus (i.e., p(null | significant effect) = .33).
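To see how steeply this depends on power, the sketch below holds the prior at .5 (as in Table 1) and sweeps power downward. Alpha = .05 is assumed, and the intermediate power values are arbitrary choices for illustration.

```python
ALPHA = 0.05
P_EFFECT = 0.5  # null and alternative equally likely, as in Table 1

def p_null_given_sig(power, p_effect=P_EFFECT, alpha=ALPHA):
    """Share of significant results expected to come from true nulls."""
    false_pos = alpha * (1 - p_effect)
    true_pos = power * p_effect
    return false_pos / (false_pos + true_pos)

for power in (0.8, 0.5, 0.25, 0.1):
    print(f"power = {power:.2f}  ->  p(null | sig) = {p_null_given_sig(power):.2f}")
```

This prints 0.06, 0.09, 0.17, and 0.33 for the four power levels, so the .1-power case gives the 1/3 figure above.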

Of course, the research from most labs (and the papers submitted to most journals) consists of a mixture of high-risk and low-risk studies and a mixture of different levels of statistical power. But without knowing the probability of the null and the statistical power, I can't know what proportion of the significant results are likely to be bogus. This is why, as I stated earlier, p values do not actually tell you anything meaningful about the false positive rate. In a real experiment, you do not know when the null is true and when it is false, and a p value only tells you about what will happen when the null is true. It does not tell you the probability that a significant effect is bogus. This is why I've lost my faith in p values. They just don't tell me anything.
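As a rough illustration of the mixture problem, here is a sketch of a purely hypothetical lab portfolio. The study counts, priors, and power values are invented for illustration (alpha = .05 assumed). Every study uses the same p < .05 criterion, yet the overall share of bogus significant results depends entirely on the mix, which nobody reading a single p value can see.

```python
# Hypothetical lab portfolio: (number of studies, p(effect is real), power)
studies = [
    (50, 0.5, 0.80),  # "safe" studies: plausible hypotheses, well powered
    (50, 0.1, 0.25),  # "bold" studies: unlikely hypotheses, underpowered
]
ALPHA = 0.05

# Expected significant results from true nulls vs. from real effects
expected_false = sum(n * (1 - p) * ALPHA for n, p, _ in studies)
expected_true = sum(n * p * power for n, p, power in studies)
share_bogus = expected_false / (expected_false + expected_true)
print(f"expected bogus share of significant results: {share_bogus:.2f}")
```

With these made-up numbers the answer is about .14; shifting the mix toward bold studies pushes it up.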

Yesterday, one of my postdocs showed me a small but statistically significant effect that seemed unlikely to be true. That is, if he had asked me how likely this effect was before I saw the result, I would have said something like 20%. And the power to detect this effect, if real, was pretty small, maybe .25. So I told him that I didn't believe the result, even though it was significant, because p(null | significant effect) is high when an effect is unlikely and when power is low. He agreed.
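Plugging the rough numbers from this example into Bayes' rule shows why the result deserved skepticism. The sketch assumes alpha = .05 and counts expected significant results per 1,000 hypothetical experiments like this one.

```python
alpha = 0.05
prior_effect = 0.20   # rough prior probability that the effect is real
power = 0.25          # rough power to detect it if it is real

# Expected significant results per 1,000 such experiments
false_hits = 1000 * (1 - prior_effect) * alpha   # about 40
true_hits = 1000 * prior_effect * power          # 50
p_null_sig = false_hits / (false_hits + true_hits)
print(round(p_null_sig, 2))  # 0.44
```

So under these assumptions, close to half of the significant effects from experiments like this one would be expected to be bogus.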

Tables 1 and 2 make me wonder why anyone ever thought that we should use p values as a heuristic to avoid publishing a lot of effects that are actually bogus. The whole point of NHST is supposedly to maintain a low probability of false positives. However, this would require knowing p(null | significant effect), which is something we can never know in real research. We can see what would be expected by conducting simulations (like those in Tables 1 and 2). However, we do not know the probability that the null hypothesis is true (assumption 1) and we do not know the statistical power (assumption 2), and we would need to know these to be able to calculate p(null | significant effect). So why did statisticians tell us that we should use this approach? And why did we believe them? [Moreover, why did they not insist that we do a correction for multiple comparisons when we do a factorial ANOVA that produces multiple p values? See this post on the Virtual ERP Boot Camp blog and this related paper from the Wagenmakers lab.]
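A simulation of this kind can be sketched in a few lines. The version below is my own simplification, not the procedure behind Tables 1 and 2: each simulated experiment is a two-sided z test, and the effect size is chosen so the test has the requested power. The point is that `p_effect` and `power` must be supplied by hand, and those are exactly the two quantities we don't know in real research.

```python
import random
from statistics import NormalDist

def simulate(p_effect, power, alpha=0.05, n_experiments=200_000, seed=1):
    """Simulate many two-sided z tests and return the share of significant
    results that came from experiments in which the null was actually true."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)   # about 1.96
    # Mean of the z statistic under a real effect that yields the requested power
    # (ignoring the tiny chance of significance in the wrong direction).
    shift = z_crit - NormalDist().inv_cdf(1 - power)
    sig_null = sig_effect = 0
    for _ in range(n_experiments):
        effect_is_real = rng.random() < p_effect
        z = rng.gauss(shift if effect_is_real else 0.0, 1.0)
        if abs(z) > z_crit:
            if effect_is_real:
                sig_effect += 1
            else:
                sig_null += 1
    return sig_null / (sig_null + sig_effect)

print(round(simulate(p_effect=0.10, power=0.5), 2))  # close to .47
```

The simulated share should land near the analytic Bayes' rule value; only the assumed prior and power, not any p value, drive the answer.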

Here's an even more pressing, practical question: What should we do given that p values can't tell us what we actually need to know? I've spent the last year exploring Bayes factors as an alternative. I've had a really interesting interchange with advocates of Bayesian approaches about this on Facebook (see the series of posts beginning on April 7, 2018). This interchange has convinced me that Bayes factors are potentially useful. However, they don't really solve the problem of wanting to know the probability that an effect is actually null. This isn't what Bayes factors are for: this would be using a Bayesian statistic to ask a frequentist question.
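One way to see why a Bayes factor alone can't give the probability that an effect is null: by the standard identity, posterior odds = Bayes factor × prior odds, so turning a Bayes factor into p(null | data) still requires a prior. In the sketch below, the Bayes factor of 3 and the prior values are hypothetical, and `p_null_from_bf` is a name of my own.

```python
def p_null_from_bf(bf10, prior_p_null):
    """Convert a Bayes factor (evidence for H1 over H0) into p(null | data).
    This needs a prior probability for the null, which the BF does not supply."""
    prior_odds_h1 = (1 - prior_p_null) / prior_p_null
    posterior_odds_h1 = bf10 * prior_odds_h1
    return 1 / (1 + posterior_odds_h1)

# Same hypothetical BF10 = 3, different prior probabilities for the null
for prior in (0.5, 0.8, 0.9):
    print(prior, round(p_null_from_bf(3.0, prior), 2))
```

The same evidence leaves p(null | data) at .25, .57, or .75 depending on the prior, so the prior problem from Tables 1 and 2 hasn't gone away.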

Another solution is to make sure that statistical power is high by testing larger sample sizes. I'm definitely in favor of greater power, and the typical N in my lab is about twice as high now as it was 15 years ago. But this doesn't solve the problem, because the false positive rate is still high when you are testing bold, novel hypotheses. The fundamental problem is that p values don't mean what we "need" them to mean, namely p(null | significant effect).
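A sketch of why more power has limits: as sample size grows, power approaches 1, but p(null | significant effect) levels off at a floor set by alpha and the prior. This assumes a hypothetical true effect of d = 0.5, a two-sample design analyzed with a normal-approximation power formula, alpha = .05, and a bold hypothesis with a 10% prior.

```python
from statistics import NormalDist

ALPHA = 0.05
P_EFFECT = 0.10   # a bold hypothesis: real effect in 10% of such experiments
D = 0.5           # hypothetical true effect size (Cohen's d)

def power_two_sample(n_per_group, d=D, alpha=ALPHA):
    """Approximate power of a two-sided, two-sample test (normal approximation,
    ignoring the negligible chance of significance in the wrong direction)."""
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    return 1 - NormalDist().cdf(z_crit - d * (n_per_group / 2) ** 0.5)

def p_null_given_sig(power, p_effect=P_EFFECT, alpha=ALPHA):
    false_pos = alpha * (1 - p_effect)
    return false_pos / (false_pos + power * p_effect)

for n in (16, 32, 64, 128, 256):
    pw = power_two_sample(n)
    print(f"n/group = {n:3d}  power = {pw:.2f}  p(null | sig) = {p_null_given_sig(pw):.2f}")

# Even with perfect power, the share of bogus results cannot drop below this floor
print(f"floor with power = 1: {p_null_given_sig(1.0):.2f}")  # 0.31
```

With a 10% prior, even a perfectly powered study leaves roughly 3 in 10 significant effects bogus under these assumptions.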

Many researchers are now arguing that we should, more generally, move away from using statistics to make all-or-none decisions and instead use them for "estimation". In other words, instead of asking whether an effect is null or not, we should ask how big the effect is likely to be given the data. However, at the end of the day, editors need to make an all-or-none decision about whether to publish a paper, and if we do not have an agreed-upon standard of evidence, it would be very easy for people's theoretical biases to impact decisions about whether a paper should be published (even more than they already do). But I'm starting to warm up to the idea that we should focus more on estimation than on all-or-none decisions about the null hypothesis.

I've come to the conclusion that the best solution, at least in my areas of research, is what I was told many times by my graduate advisor, Steve Hillyard: "Replication is the best statistic." Some have argued that replication can also be problematic. However, most of these potential problems are relatively minor in my areas of research. And the major research findings in these areas have held up pretty well over time, even in registered replications.
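Bayes' rule also suggests why replication is so powerful. Each independent significant result multiplies the odds of the null by alpha / power, so a bogus effect rarely survives a second test. The sketch assumes the Table 2-style bold hypothesis (10% prior), alpha = .05, and that the original study and each replication are independent and have the same power of .5; real replications may differ on all of these.

```python
def update_p_null(p_null, power, alpha=0.05):
    """Bayes' rule: p(null) after one more independent significant result."""
    false_pos = alpha * p_null
    true_pos = power * (1 - p_null)
    return false_pos / (false_pos + true_pos)

p_null = 0.90   # bold hypothesis: real effect in only 10% of cases
power = 0.5     # assumed power of the original study and of each replication
for attempt in ("original", "replication 1", "replication 2"):
    p_null = update_p_null(p_null, power)
    print(f"after significant {attempt}: p(null) = {p_null:.3f}")
```

Under these assumptions, the probability that the effect is bogus drops from about .47 after the original study to about .08 after one successful replication.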

I would like to end by noting that lots of people have discussed this issue before, and there are some great papers talking about this problem. The most famous is Ioannidis (2005, PLoS Medicine). A neuroscience-specific example is Button et al. (2013, Nature Reviews Neuroscience) (but see Nord et al., 2017, Journal of Neuroscience for an important re-analysis). However, I often find that these papers are bombastic and/or hard to understand. I hope that this post helps more people understand why p values are so problematic.

For more, see this follow-up post.